Also, at the start of Function "q training update", we have "qnewstate". Recall that in Function "q training step", we save the values "qcurstate" and "qaction". Therefore, the further steps the reward is away from you, the more times you multiply gamma. Three steps away will give you 0.9 x 0.9 x 1 = 0.81. For example, if gamma is 0.9, two steps away to reward 1 will give you 0.9 x 1 = 0.9. You will still get the reward, but discounted. How about the next move does not lead to an immediate win, but with one more move you will win. For example, if the next move will give you the win, you will get the reward in full immediately. Gamma (or γ, the third Greek letter) is used to discount future rewards. If I find none, the maximum Q-Value is 0. I want to get the one that gives me the maximum Q-Value. In this Next_S state, there are many valid actions (or valid moves) inside the Q-Matrix. At this moment, the state of the game is changed to Next_S. At this moment, the state of the game is S and I pick action A.

Let's examine the items gamma, Next_S, and Next_A.įirst, let's discuss what are Next_S and Next_A. We will use this one in our further coding. New_Q = (1 - alpha) x Old_Q + alpha x gamma x Max ( Q ) On the other hand, when it is not the end game, "reward" is 0 and the complete formula can be reduced to. New_Q = (1 - alpha) x Old_Q + alpha x (reward + gamma x Max ( Q ) )ĭuring the end game, since there is no more move, the part in red is always 0. The complete formula should look like this, New_Q = (1 - alpha) x Old_Q + alpha x reward The secret is in the previous lesson, only half of the formula is shown.

Able to perform one complete training session.Understanding the meaning of gamma and epsilon.Learn the complete theory of Q-Learning.If you recall, we have catered for the case when "vm" is empty, meaning there is no more move for the player and the game has ended.īut how about during the mid-game, or the opening? How does the bot learn these in Q-Learning? In the last lesson, we started to update it.

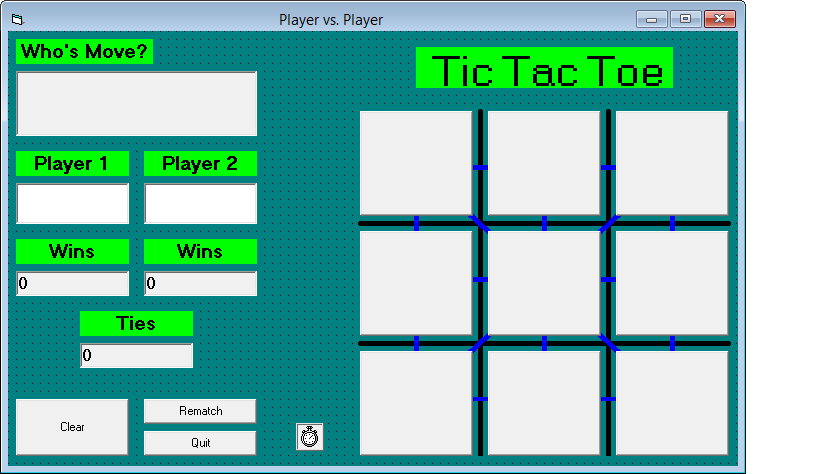

Q-Matrix is the 'brain' we eventually use to play tic-tac-toe. TIP: You can find the finished project of this lesson here. Then come back to understand it after you have run the training successfully. You can skip the theory and proceed to coding first. Build your own Tic-Tac-Toe game with Blockly and learn Reinforcement Learning (17/20) Lesson 17: Full Q-Matrix Updated
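For readers who prefer text code to blocks, here is a minimal Python sketch of the complete formula New_Q = (1 - alpha) x Old_Q + alpha x (reward + gamma x Max(Q)). It is not the lesson's Blockly project. The dictionary layout, the function names `max_q` and `q_update`, the default `alpha=0.5`, and treating an unseen Old_Q as 0 are all assumptions made for illustration.

```python
def max_q(q_matrix, next_state, valid_moves):
    """Largest stored Q-Value among the valid moves in next_state.

    Returns 0 when no entry is found, as described in the lesson.
    """
    values = [
        q_matrix[(next_state, move)]
        for move in valid_moves
        if (next_state, move) in q_matrix
    ]
    return max(values, default=0.0)


def q_update(q_matrix, state, action, reward, next_state, valid_moves,
             alpha=0.5, gamma=0.9):
    """Apply New_Q = (1 - alpha) * Old_Q + alpha * (reward + gamma * Max(Q)).

    q_matrix is a plain dict keyed by (state, action); this layout is an
    assumption, not the structure used in the Blockly project.
    """
    old_q = q_matrix.get((state, action), 0.0)  # assumed: unseen entries are 0
    target = reward + gamma * max_q(q_matrix, next_state, valid_moves)
    new_q = (1 - alpha) * old_q + alpha * target
    q_matrix[(state, action)] = new_q
    return new_q
```

With an empty list of valid moves (the end game), `max_q` returns 0 and the update falls back to the half formula from the previous lesson. With `reward=0` (mid-game), only the `gamma x Max(Q)` part contributes.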
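The gamma arithmetic can also be checked with a few lines of Python. This sketch follows the lesson's counting, where a win on the very next move is one step away and is not discounted. The function name `discounted_reward` is hypothetical. It shows the idealized discounted value only, ignoring how alpha blends old and new Q-Values during training.

```python
def discounted_reward(reward, steps_away, gamma=0.9):
    """Value today of a reward that arrives `steps_away` moves from now.

    steps_away=1 means the next move wins, so the reward is received in full.
    Each extra step multiplies the reward by gamma once more.
    """
    if steps_away < 1:
        raise ValueError("steps_away must be at least 1")
    return (gamma ** (steps_away - 1)) * reward


for steps in (1, 2, 3):
    print(steps, round(discounted_reward(1.0, steps), 2))
```

For a reward of 1 and gamma of 0.9, the three printed values correspond to the full reward, 0.9, and 0.81 from the example in the text.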
